Expert insights on synthetic data

The latest

How synthetic data can help solve AI’s data crisis

As AI demand outpaces the availability of high-quality training data, synthetic data offers a path forward. We unpack how synthetic datasets help teams overcome data scarcity to build production-ready AI.

Blog posts


Data synthesis

Data privacy

Generative AI

Tonic Structural

Tonic Fabricate

Tonic Textual

Data privacy

Healthcare

Tonic.ai editorial

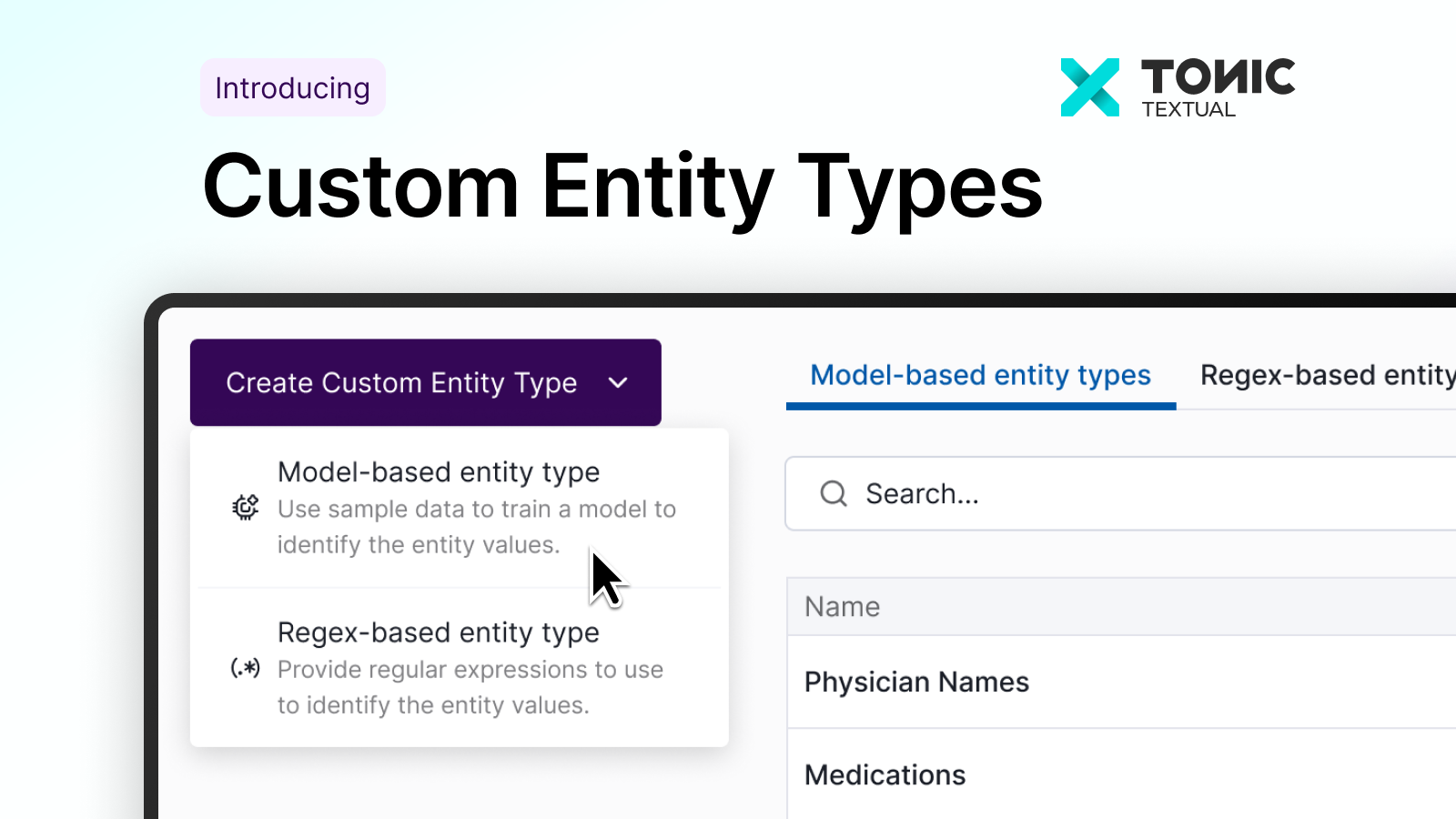

Tonic Textual

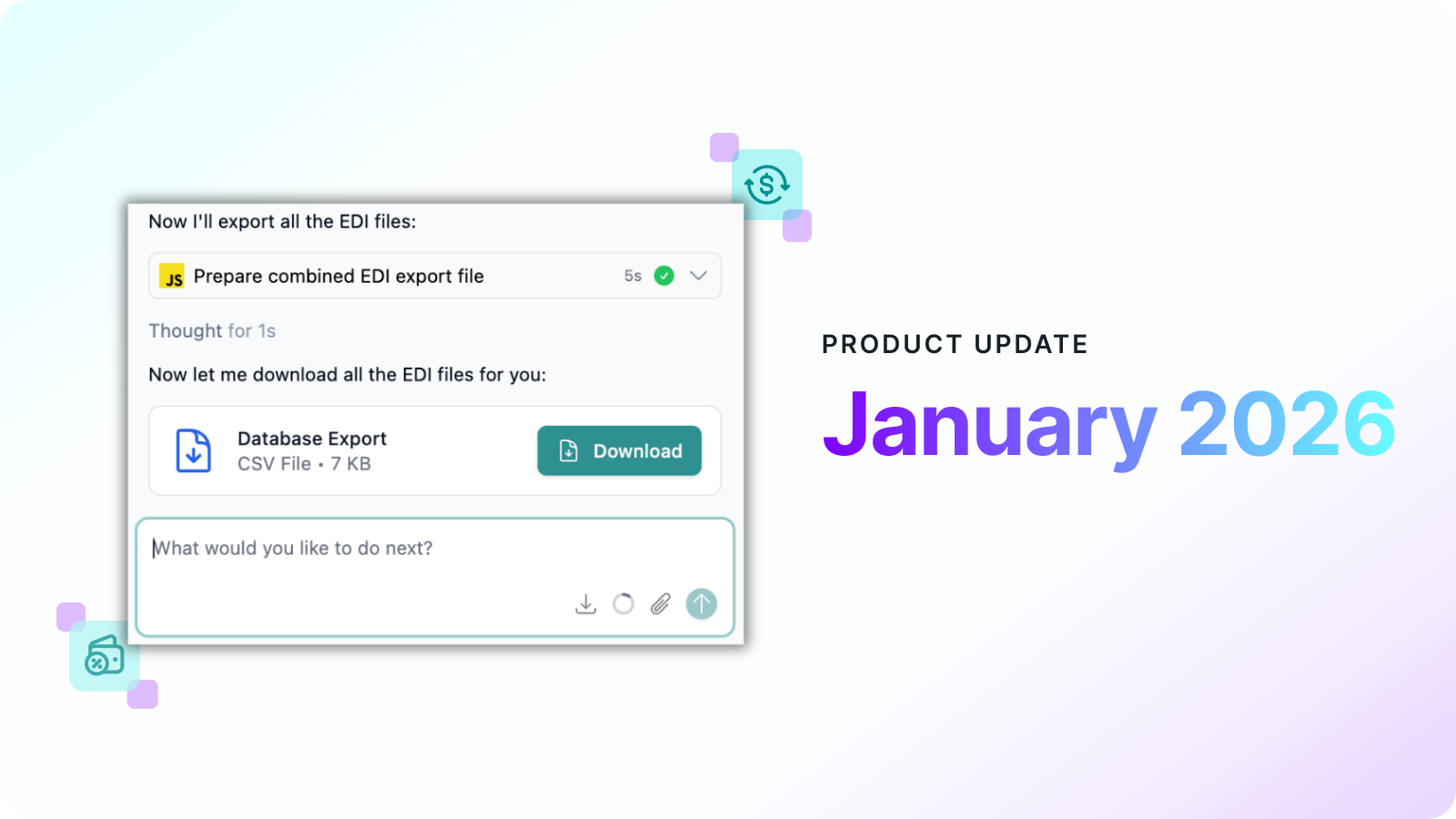

Product updates

Tonic.ai editorial

Tonic Fabricate

Tonic Structural

Tonic Textual

Data de-identification

Data privacy

Healthcare

Tonic Structural

Tonic Textual

Data de-identification

Test data management

Tonic Structural

Tonic Textual

Test data management

Data privacy

Tonic Structural

Tonic Textual

Tonic Fabricate

Data de-identification

Data privacy

Product updates

Tonic Textual

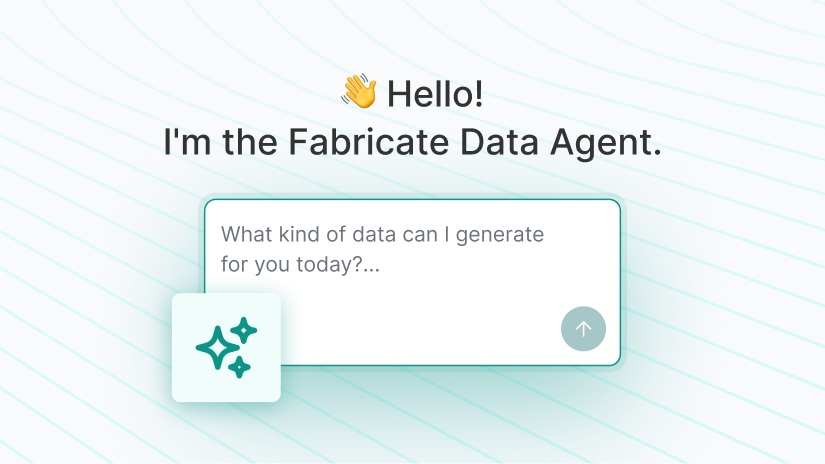

Hyper-realistic synthetic data via agentic AI has arrived. Meet the Fabricate Data Agent.

Product updates

Product updates

Generative AI

Tonic.ai editorial

Data synthesis

Tonic Fabricate

Product updates

Generative AI

Data privacy

Tonic Textual

Product updates

Tonic.ai editorial

Tonic Fabricate

Tonic Structural

Tonic Textual
