Defining synthetic test data generation

Synthetic test data generation is the process of creating high-quality data that mimics real data, but is not directly linked to it, for use in testing software applications. Simply put, it’s the gold standard for having high-quality test data available for development, and for ensuring quality when building software. Why so? Because it allows for rigorous, comprehensive testing without the privacy concerns associated with real customer data, and is much more effective at finding bugs and quality concerns than test data built by hand.

In the world of software development, testing plays a pivotal role in ensuring the reliability and performance of applications. To do this effectively, you need data; but not just any data — you need data that is representative of actual use cases and scenarios. This is where synthetic test data generation comes into play.

By leveraging sophisticated algorithms and tools, this process generates data that closely resembles the actual data in terms of structure and statistical properties, yet is completely devoid of sensitive, personal information (PII). This makes it an ideal solution for testing environments, especially where privacy is paramount, as it eliminates the risk of data breaches whilst still providing a realistic platform for testing. It is distinct from simply generating test data from scripts — generating synthetic data requires having insight into the statistics, dimensionality, and quality of data that is seen in production.
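As a rough illustration of what "matching statistical properties" can mean, here is a minimal Python sketch. It assumes a single numeric column that is reasonably well described by a normal distribution; the `production_totals` values are made up for the example, and real tooling would model far more than one column.

```python
from statistics import NormalDist, fmean

# Hypothetical production values (for example, order totals in dollars).
production_totals = [120.0, 135.0, 128.0, 142.0, 131.0, 125.0, 138.0, 129.0]

# Learn the shape of the column rather than copying its rows.
fitted = NormalDist.from_samples(production_totals)

# Draw brand-new values from the fitted distribution; the seed keeps runs repeatable.
synthetic_totals = fitted.samples(1000, seed=7)

print(f"production mean={fitted.mean:.1f}, stdev={fitted.stdev:.1f}")
print(f"synthetic  mean={fmean(synthetic_totals):.1f}")
```

The synthetic values follow the same overall distribution as the production column, but none of them is copied from a production row.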

In addition, the process of synthetic test data generation is not necessarily confined by the limitations of real-world data. It can create diverse scenarios that may not be present in the current data but could potentially occur in the future, allowing an application to scale up to new demands.

In essence, the benefits of generating synthetic test data extend beyond mere compliance with privacy regulations. It offers a practical, efficient, and secure way to conduct necessary testing, ensuring that software applications are robust and reliable.

Types of test data: Real vs. synthetic

Real test data, as the term implies, is genuine data extracted directly from existing databases. It provides a highly accurate reflection of actual scenarios and user interactions, making it extremely valuable for testing. This data includes all the unique irregularities, variables, and anomalies that come with real-world use, offering a best-in-class platform for testing software applications. But the catch? Privacy and data sensitivity. Using real data for testing can potentially expose sensitive user information, which can lead to every security engineer’s nightmare — a privacy breach. Moreover, there are often legal and regulatory restrictions on the use of such data (HIPAA, GDPR, to name a few), further complicating its usage.

Synthetic data for testing, on the other hand, is artificially created data that mimics the characteristics and structure of real data but lacks any association to any real world entities. This type of data generation eliminates any privacy risks associated with real data, making it a safer choice for testing environments. Synthetic data can also be tailored to simulate a wide range of scenarios and use-cases, providing extensive coverage for testing. It offers the possibility of simulating rare but high-impact events that may not be present in the available real data, as well as the ability to scale for predicted use cases.

However, generating synthetic data that accurately represents real-world data is not easy: it requires a deep understanding of the data domain, and sophisticated software to ensure high quality data. To trust the quality of your tests, it is vital that synthetic data maintains the same statistical properties, formats, and quirks as the real data. Likewise, predicting future scenarios or rare events also requires careful analysis across all related data sets, often done better with the assistance of intelligent algorithms.

While real data doesn’t require any understanding or tweaking of the data to ensure quality tests, it comes with a mess of red tape and great risks to users in all industries. At best, it requires sophisticated access controls and means a poor experience for developers and QA. At worst, it means privacy leaks and violations of legal compliance. Ultimately, we find that synthetic test data allows for effective testing without these risks, provided you invest in high quality tooling dedicated to the task.

Methods of synthetic test data generation

There are many different methods for generating synthetic test data, ranging from manual creation to automated tools. Manual creation involves generating data by hand or using simple scripts, but as anyone knows who has thrown together a script, it can be time-consuming and lacks scalability due to its maintenance costs. Automated tools, on the other hand, use algorithms to generate large volumes of synthetic data based on predefined parameters. Some data generation methods include data masking, data scrambling, and data fabrication. Data masking and scrambling involve modifying real data to create synthetic data, while data fabrication involves creating completely new data from scratch.
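To make the difference between masking, scrambling, and fabrication concrete, here is a small Python sketch of each. Everything in it is hypothetical: the `real_rows` sample, the function names, and the `example.test` domain are ours, and the unsalted hash is only there to keep the example short (it would not be a safe masking scheme on its own).

```python
import hashlib
import random

# Hypothetical "real" rows used only for illustration.
real_rows = [
    {"name": "Alice Smith", "email": "alice@example.com", "balance": 120.50},
    {"name": "Bob Jones", "email": "bob@example.com", "balance": 87.25},
    {"name": "Cara Lee", "email": "cara@example.com", "balance": 310.00},
]

def mask_email(email: str) -> str:
    """Masking: replace a real value with a stand-in that keeps the same shape."""
    # Assumption: a plain hash keeps the sketch short; it is not safe by itself.
    digest = hashlib.sha256(email.encode("utf-8")).hexdigest()[:10]
    return f"user_{digest}@example.test"

def scramble_column(rows, column, seed=0):
    """Scrambling: shuffle one column across rows, breaking row-level links."""
    rng = random.Random(seed)
    values = [row[column] for row in rows]
    rng.shuffle(values)
    return [{**row, column: value} for row, value in zip(rows, values)]

def fabricate_rows(count, seed=0):
    """Fabrication: build brand-new rows with no real data as input."""
    rng = random.Random(seed)
    first_names = ["Ana", "Ben", "Chen", "Dee"]
    last_names = ["Ng", "Ortiz", "Patel", "Quinn"]
    return [
        {
            "name": f"{rng.choice(first_names)} {rng.choice(last_names)}",
            "email": f"user{i}@example.test",
            "balance": round(rng.uniform(0, 500), 2),
        }
        for i in range(count)
    ]

masked = [{**row, "email": mask_email(row["email"])} for row in real_rows]
scrambled = scramble_column(real_rows, "balance", seed=42)
fabricated = fabricate_rows(5, seed=42)
```

Masking and scrambling both start from real rows, so they inherit realistic values but need care around privacy. Fabrication starts from nothing, so it is private by construction but only as realistic as the rules you give it.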

All of these test data generation techniques have a lot of optionality when it comes to implementation, from generative models to data masking to crowdsourcing. These methods allow organizations to create diverse and representative test datasets tailored to their specific needs, whether it's for testing, protecting privacy, or simulating real-world scenarios.

Let’s look more closely at the various methods of synthetic test data generation:

The choice of method depends on your specific requirements and the level of realism needed in the synthetic data. Most often we see a combination of these methods used to create diverse and representative test datasets.

Challenges in test data generation

With so many different techniques and ways to go about creating test data, a multitude of challenges exist that need to be carefully addressed.

Some of the biggest challenges we’ve seen are:

- The realism of synthetic data. Creating artificial data that faithfully mirrors real-world scenarios can be a complex task. Ensuring that the generated data closely matches the distribution, patterns, and anomalies present in actual data is a constant pursuit. Without realistic data, the efficacy of testing and analysis may be compromised.

- Data quality is another paramount concern. Synthetic data may not always capture the quality and nuances found in real data. Inaccuracies or low-quality synthetic data can lead to unreliable results, affecting decision-making and system performance. Maintaining data quality is crucial for the success of any testing or analysis endeavor.

- Diversity in datasets is essential to comprehensively evaluate systems and models. Generating datasets that cover a wide range of scenarios, including edge cases, requires careful planning and consideration. Inadequate diversity can result in incomplete testing and limited insights.

- Data consistency poses another challenge, especially when dealing with different systems and databases. Inconsistent data can lead to compatibility issues and unreliable testing results, further complicating the testing process.

In many ways these problems are often solved in a more effective way when using techniques that rely upon or augment real world data. You’re clearly not building data from scratch, and as such get a lot of the benefits of high quality data for free.
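One way this plays out for consistency: if the same real identifier is always replaced by the same stand-in, relationships between tables survive the transformation. The Python sketch below shows the idea with a keyed hash. The table contents, the `pseudonymize` helper, and the key are all hypothetical, and a real deployment would manage the key as a secret.

```python
import hashlib
import hmac

# Hypothetical key for illustration only; a real key would be stored securely.
SECRET_KEY = b"demo-key-not-for-production"

def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Map a real identifier to a stable stand-in: same input, same output."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"cust_{digest[:12]}"

# Two hypothetical tables that share a customer_id column.
customers = [
    {"customer_id": "C-1001", "plan": "pro"},
    {"customer_id": "C-1002", "plan": "free"},
]
invoices = [
    {"invoice_id": 1, "customer_id": "C-1001"},
    {"invoice_id": 2, "customer_id": "C-1001"},
    {"invoice_id": 3, "customer_id": "C-1002"},
]

masked_customers = [{**c, "customer_id": pseudonymize(c["customer_id"])} for c in customers]
masked_invoices = [{**i, "customer_id": pseudonymize(i["customer_id"])} for i in invoices]

# Every invoice should still point at a customer that exists after masking.
known_ids = {c["customer_id"] for c in masked_customers}
orphans = [i for i in masked_invoices if i["customer_id"] not in known_ids]
print(f"orphaned invoices after masking: {len(orphans)}")
```

Because the mapping is deterministic, it works even when the two tables live in different databases and are processed separately.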

However, privacy considerations also loom large in the realm of test data generation, particularly when using real data as a foundation. Protecting sensitive information while retaining data utility is a delicate balancing act. Properly masking or anonymizing data is crucial to avoid privacy breaches and legal issues. We’ve often found that extensive systems to find private information are necessary to avoid human error when masking data; having automated software purpose built significantly increases the quality of the data while removing the risks associated with augmenting private information.
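To give a feel for what automated detection involves, here is a deliberately simple Python sketch that scans every column for a few PII-like patterns. The patterns and the `scan_for_pii` helper are our own assumptions for illustration; they will miss many real formats, and purpose-built detectors go well beyond regular expressions.

```python
import re

# Simplified, hypothetical patterns; real PII detection needs far more coverage.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}

def scan_for_pii(rows):
    """Return {column: set of PII kinds found} for every column with a match."""
    findings = {}
    for row in rows:
        for column, value in row.items():
            text = str(value)
            for kind, pattern in PII_PATTERNS.items():
                if pattern.search(text):
                    findings.setdefault(column, set()).add(kind)
    return findings

# Hypothetical rows: note the PII hiding in a free-text column.
sample_rows = [
    {"id": 1, "contact": "jane@example.com", "notes": "Call 555-123-4567 after 5pm"},
    {"id": 2, "contact": "n/a", "notes": "SSN on file: 123-45-6789"},
]

findings = scan_for_pii(sample_rows)
for column, kinds in sorted(findings.items()):
    print(column, sorted(kinds))
```

The free-text `notes` column is the kind of place a person reviewing a schema by hand tends to overlook, which is why scanning values rather than column names matters.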

Using generative methods allows you to use machine learning to understand the relationships in your data without needing a data scientist to determine all of its particular qualities. However, there are also challenges unique to these applications:

- Data volume is a concern, especially for applications dealing with big data and machine learning. Generating large volumes of synthetic data can be resource-intensive and time-consuming. Scalability is a significant challenge that organizations must address as data complexity grows.

- Unintentional bias may be introduced during the data generation process, leading to skewed results and inaccurate insights. Recognizing and mitigating bias is crucial to obtain unbiased test data.

- Maintaining synthetic datasets over time is another challenge. As real-world data evolves, synthetic data should be updated to reflect these changes. This ongoing maintenance can be logistically complex, requiring continuous efforts.

- Finally, domain-specific nuances and resource constraints can add layers of complexity to the data generation process. Specialized fields may demand unique considerations, and the computational resources required for high-quality synthetic data can be costly and time-consuming.
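Bias in particular lends itself to a simple automated check. As a sketch, the Python below compares how often each category appears in a real column versus its synthetic counterpart. The plan names, counts, and the 0.04 threshold are invented for the example; a sensible threshold depends on your data.

```python
from collections import Counter

def category_shares(values):
    """Fraction of rows that fall into each category."""
    counts = Counter(values)
    total = sum(counts.values())
    return {category: count / total for category, count in counts.items()}

def share_drift(real_values, synthetic_values):
    """Synthetic share minus real share, per category (0.0 when a side lacks it)."""
    real = category_shares(real_values)
    synthetic = category_shares(synthetic_values)
    categories = set(real) | set(synthetic)
    return {c: synthetic.get(c, 0.0) - real.get(c, 0.0) for c in categories}

# Hypothetical columns: the generator has dropped the rare "enterprise" plan.
real_plans = ["free"] * 70 + ["pro"] * 25 + ["enterprise"] * 5
synthetic_plans = ["free"] * 90 + ["pro"] * 10

drift = share_drift(real_plans, synthetic_plans)
flagged = sorted(c for c, delta in drift.items() if abs(delta) > 0.04)
print("categories with noticeable drift:", flagged)
```

A check like this also catches the quieter failure where a rare category disappears entirely from the synthetic output.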

Addressing these challenges involves a combination of data generation techniques, careful planning, data validation, and ongoing maintenance. Organizations must navigate these obstacles to ensure that synthetic test data serves its intended purpose effectively in testing, development, and analysis. We’ve found consistently that a combination of different pieces of purpose-built test data generation software and generative models are necessary in order to work around these challenges and serve the highest quality (and lowest risk) data for any given domain.


Case studies: Using synthetic test data in testing

At Tonic, we’ve seen time and time again how having a strong strategy around creating synthetic data, combined with the best tools, leads to real and meaningful results.

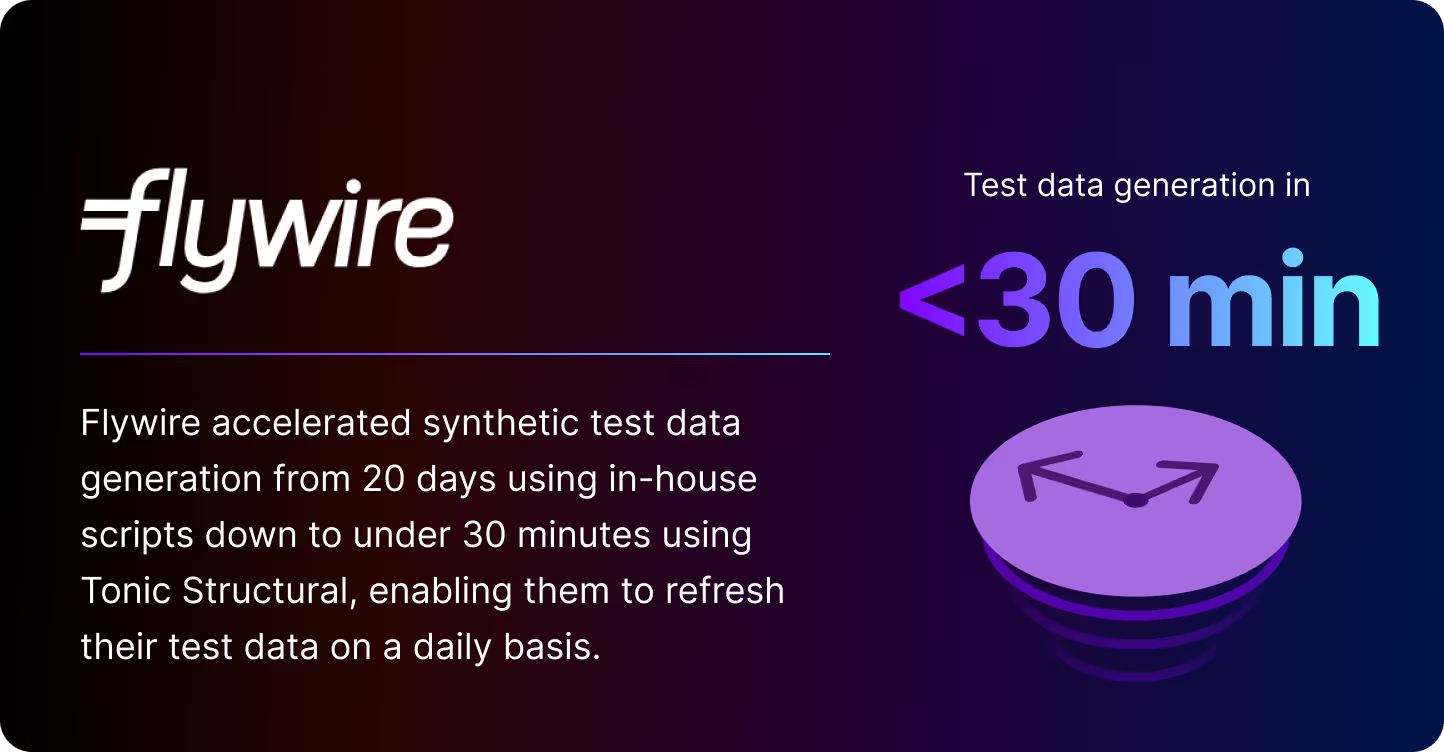

Flywire found itself constrained by HIPAA regulations, which led to an initial attempt at building data from scratch with in-house scripts. These scripts were time-consuming and difficult to maintain: creating a synthetic data set took 40 hours at the minimum, and 20 days for the full dataset! Like many of our customers, they have diverse data sets across many different types of databases, along with a complex set of services in AWS that use them. By using a specialized tool like Tonic that could generate synthetic data for all of their data sources in a realistic and consistent manner, Flywire can now spin up a new environment in minutes, and builds on-demand test data environments several times a day.

Flexport is an example where both scale and having 30+ teams using synthetic data make data generation a challenging engineering problem. As at many other companies, a single in-house tool quickly became outdated and insufficient for meeting the goals of engineering teams. By using Tonic, they were able to heavily automate test data generation by running it in line with their schema migration process multiple times a day. Not only is the data consistently up to date with production data, but developers can each have their own on-demand databases to work on and test important features and bug fixes in development.

Paytient very quickly saw that prioritizing a valuable solution led to significant results. With a large trove of highly sensitive payment and health data, they found that building a successful tool was far beyond the capabilities of an engineering team their size, and struggled to find a solution that was beneficial to developers and product support. Using a highly configurable and automated system like Tonic allowed the team to save hundreds of hours, and ultimately return a 3.7x ROI while increasing the quality of life for everyone using the data on a daily basis. In addition, they took on far less risk than they would have by building a system themselves, where PII could more easily be introduced into lower environments.

All of these organizations had the same problems: complex data sets, the need for high quality data, privacy and security concerns, and a great deal of complexity that was only solved by using purpose built tools for the problem.

Best practices for synthetic test data generation

At Tonic, we have a lot of practice at getting our customers high quality data for testing while ensuring adherence to privacy regulation in many different domains. Here are our recommended test data generation best practices:

Understand your data: A crucial first step in synthetic data generation is understanding the structure, constraints, and relationships inherent in your real data. Take the time to analyze your existing data thoroughly and identify its key characteristics. This means making investments with database administrators, development groups who work within a specific domain, and optionally data scientists to provide analysis and insight into the data you have present. Having a deep understanding of your real data will enable you to create synthetic data that is a true representation of the real data set's diversity and complexity. However, these often come with high time costs — we have found that great tools can help deliver these insights at the click of a button by analyzing and presenting private information, relationships, and other qualities present. It can save a lot of time.

Use the right tools: The market is flooded with a plethora of tools for synthetic data generation, each with its own unique strengths and capabilities. These range from simple scripts that could be manually executed to sophisticated software that can handle complex data structures and generate large volumes of data. It's important to identify and select the data generation tools that are best suited to your specific needs and capabilities. Whether you need a tool that is user-friendly and easy-to-use, or a more advanced tool that offers greater flexibility and customization, make sure to choose wisely. We have found time and time again that this is not a problem one solves easily without domain specific tools to complete the job — ones that are purpose built and can be automated consistently show better results than trying to take on all of these challenges yourself.

Include edge cases: When generating synthetic data, it can be tempting to focus solely on the 'normal' scenarios that are most likely to occur. However, this approach can leave you unprepared for the rare but potentially significant edge cases. Therefore, when designing your synthetic data, make sure to include these rare scenarios as well. Whether it's an unlikely combination of variables, an unusually high or low value, or a rare sequence of events, including edge cases in your synthetic data will ensure your testing is comprehensive and robust. We have found that using systems to find these edge cases, rather than relying on those familiar with the data, is a much more effective way to minimize bias in the output data.
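As a small sketch of the idea, the Python below generates typical orders and then deliberately mixes in a fixed set of edge cases so they are guaranteed to appear. The specific edge values and the `generate_orders` helper are hypothetical and hand-picked for the example; in practice the list would come from analysis of your own data.

```python
import random

TYPICAL_NAMES = ["Ana Ng", "Ben Ortiz", "Chen Patel"]

# Hypothetical edge cases: empty, quoted, non-ASCII, and very long names,
# plus zero, tiny, negative, and very large amounts.
EDGE_CASE_NAMES = ["", "O'Brien", "Zoë Ångström", "x" * 255]
EDGE_CASE_AMOUNTS = [0.0, 0.01, -1.0, 999_999_999.99]

def generate_orders(typical_count, seed=0):
    """Generate typical orders, then add one order per edge case and shuffle."""
    rng = random.Random(seed)
    orders = [
        {
            "customer": rng.choice(TYPICAL_NAMES),
            "amount": round(rng.uniform(5, 200), 2),
            "edge_case": False,
        }
        for _ in range(typical_count)
    ]
    orders += [
        {"customer": name, "amount": 25.0, "edge_case": True}
        for name in EDGE_CASE_NAMES
    ]
    orders += [
        {"customer": "Ana Ng", "amount": amount, "edge_case": True}
        for amount in EDGE_CASE_AMOUNTS
    ]
    rng.shuffle(orders)  # avoid edge cases always landing at the end
    return orders

orders = generate_orders(typical_count=20, seed=1)
print(sum(order["edge_case"] for order in orders), "edge-case orders out of", len(orders))
```

Adding the edge cases explicitly, rather than hoping a random generator produces them, means every run of the test suite exercises them.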

Maintain privacy and security: When generating synthetic data, particularly when using real data as a base, it's crucial to ensure that all sensitive information is thoroughly anonymized. This involves not only removing personally identifiable information but also ensuring that the synthetic data cannot be reverse-engineered to reveal any sensitive information. Employ robust data masking and anonymization techniques, and always validate your synthetic data to ensure it maintains the privacy and security of the original data. We consistently believe that you need great PII detection with bulk-application abilities for any data set of even a moderate magnitude.

Continuously update your synthetic data: As your real data evolves over time, so too should your synthetic data. Regularly updating your synthetic data to reflect changes in your real data will ensure that your testing remains relevant and effective. This might involve generating new synthetic data to reflect recent trends, or modifying existing synthetic data to incorporate new variables or scenarios. We have found that an automated system that needs minimal human intervention over time makes regular, high quality data available on a consistent basis.

Validate your synthetic data: After generating your synthetic data, don't forget to validate it. This involves checking that the synthetic data accurately reflects the characteristics of the real data, that all privacy and security requirements have been met, and that the synthetic data is fit for its intended purpose. More often than not, this is done by simply running the test data through test suites that you’ve already built. Sometimes errors might be because of the software, but other times the cause could be the test data itself. Running a staging or testing environment can also help internal users notice irregularities in the data.
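Beyond running existing test suites, two of these checks are easy to automate. The Python sketch below compares basic statistics between a real and a synthetic column, and looks for real values that leaked through unchanged. The sample values, helper names, and the 10% tolerance are assumptions made for the example.

```python
import statistics

def summarize(values):
    """Basic statistics for one numeric column."""
    return {"mean": statistics.fmean(values), "stdev": statistics.stdev(values)}

def within_tolerance(real_values, synthetic_values, tolerance=0.1):
    """Report, per statistic, whether the relative gap is inside the tolerance."""
    real = summarize(real_values)
    synthetic = summarize(synthetic_values)
    return {
        name: abs(synthetic[name] - real[name]) <= tolerance * abs(real[name])
        for name in real
    }

def leaked_values(real_values, synthetic_values):
    """Real values that appear verbatim in the synthetic output."""
    return sorted(set(real_values) & set(synthetic_values))

# Hypothetical numeric column: does the synthetic data keep its shape?
real_amounts = [10.0, 12.5, 11.0, 14.0, 13.5, 9.5]
synthetic_amounts = [10.5, 12.0, 11.5, 13.0, 14.5, 9.0]
report = within_tolerance(real_amounts, synthetic_amounts)

# Hypothetical sensitive column: did any real email survive?
real_emails = ["jane@corp.example", "raj@corp.example"]
synthetic_emails = ["user1@example.test", "raj@corp.example"]
leaks = leaked_values(real_emails, synthetic_emails)

print("statistics within tolerance:", report)
print("leaked real values:", leaks)
```

A verbatim-match check only makes sense for columns where collisions would be suspicious, such as emails or account numbers; low-cardinality columns like country codes will overlap legitimately.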

Synthetic test data tips, in summary

All in all, generating high quality synthetic test data with low risk of exposure to private information is a challenging undertaking. It requires many different methods, each with its own challenges, to get data output that is usable and brings a lot of utility to an organization. We have found consistently that using a variety of methods with purpose-built tools is the best way to save time and effort while ensuring the highest quality data. Having tools that are easy to automate and work with simplifies the investments that have to be made up front, and ultimately leads to the most success for software development (especially in the long run!). With the right tools and capabilities, having high quality synthetic test data can propel an organization forward towards greater efficiency and happiness for everyone who uses test data on a regular basis. To learn more about our data synthesis products, connect with our team today.
