Honey, I shrunk the (Postgres) database

Tonic.ai is a data company. We are building a platform to make it simple to create synthetic data that can be used in lieu of data that contains PII (or PHI). As part of our efforts, we often find it necessary to subset data. Subsetting data is the process of taking a representative sample of your data in a manner that preserves the integrity of your database, e.g., give me 5% of my users. If you do this naively, your database will break foreign key constraints or you’ll end up with a statistically non-representative data sample. Here are a few situations in which you might find subsetting data to be important or necessary:

- You’d like to use your production database in staging or test environments (sans the PII) but the database is very large so you want to use only a portion of it.

- You’d like a test database that contains a few specific rows from production (and related rows from other tables) so you can reproduce a bug.

- You want to share data with others but you don’t want them to have all of it.

To help you get started with subsetting, we are open-sourcing our own homegrown subsetting tool. The project is called Condenser, and you can find it on github. Before we dive into the code, however, we’ll give a quick overview of the problem and show you some of the do’s and don’ts of subsetting, as well as show you the techniques we’ve implemented in Condenser.

A Quick Overview

As mentioned previously, subsetting a database means to take a subset of its rows, across multiple tables, while still maintaining all of the constraints of the original database. Subsetting a database can be desirable for many reasons. One common use-case is to scale down a production database to a more reasonable size so that it can be used in staging, test, and development environments. This can be done to save costs and, when used in tandem with PII removal, can be quite powerful as a productivity enhancer. Another example is copying specific rows from one database and placing them into another while maintaining referential integrity.

In this article, we will discuss in detail how to accomplish the first use case. Specifically, we’ll show you how to scale a database down to a desired percentage of its original size, i.e., to return a new database containing only 5% of the users in production.

Existing Solutions & Our Goal

Oracle, Informatica, and Computer Associates are all happy to sell you data subsetting tools. It’s hard to learn much about these solutions, as they’re closed source and online documentation is pretty light. Also, they frequently only work with their proprietary database technology. If you’re a small team working with lightweight open-source tools (e.g., Postgres or MySQL), these solutions are heavy-handed and out of reach.

The only open-source solution we found was Jailer. Jailer has been around for a while and is actively maintained. You can get really precise with how you want to subset your database with Jailer, but configuring Jailer for our needs was challenging. Our clients’ databases have hundreds of tables and multiple, cyclic foreign key relationships among the tables. Jailer’s precision comes with a configuration cost.

Our goal was to build a database subsetter with minimal configuration parameters for databases with arbitrarily complex foreign key relationships that include cycles.

Subsetting an Entire Database

Below is a database containing six tables. Arrows denote a foreign key relationship from parent to child, e.g., the Users table has a foreign key reference to the Meta table.

A Naive Solution and Its Failure Modes

Let’s say we desire to subset this database to 5% of the users in production. We can start by sampling the User’s table directly, perhaps with a SQL query similar to this one:

and then copying the result set to our new database.

Now, we must copy over any rows from Meta which were referenced by User. We can find these rows by querying our new database with:

where * denotes a table in our subset database. We can use this result set to obtain the required rows from Meta.

At this point, we could stop. We have 5% of Users and all of the necessary rows from Meta to not create any foreign key constraint violations.

This new database, however, won’t be very interesting or useful. For example, even though we have 5% of Users, we don’t have any of those users’ Events. If your goal is to use the new database in a development environment where your front-end/back-end requires the other tables, which are currently empty, you’ll find it lacking.

A Solution

How can we include the other tables? We need a methodology for visiting tables in our databases and adding the necessary rows to avoid foreign key constraint violations. This methodology must work, regardless of the database topology.

A Robust Solution with Topological Sorting

A topological sort is one such methodology. This sorting algorithm ensures that each node of a graph is only visited once its children have been visited. In the context of our problem, it ensures that a table is only visited once the tables it references have also been visited.

A topological sort, however, doesn’t exist for graphs with cyclic dependencies. For now, we’ll focus on graphs that are acyclic; later we’ll provide some details on how to handle cycles, as well.

For the above database, our topological sort would look something like this:

{Meta, Figs}, {User, Facts}, {Events}, {Data}

The order is read left to right, and tables inside of {} can be swapped around and still maintain the validity of the result. For example, the above representation actually represents four unique topological sorts. Meta and Figs can be swapped, and Users and Facts can also be swapped. Therefore,

Meta, Figs, Users, Facts, Events, Data

Meta, Figs, Facts, Users, Events, Data

Figs, Meta, Facts, Users, Events, Data

Figs, Meta, Users, Facts, Events, Data

are all valid, whereas swapping Events with Facts would not be valid. Another way to rationalize this is by seeing that both User and Facts depend on Meta and Figs, respectively, so Meta and Figs must be done first but their respective order does not matter.

The Core Algorithm

Start by choosing a desired end result. Let’s say, for example, that we desire a new database that contains P percent of the rows in the production database’s table 𝗧.

1. Determine the topological sort for your database, based on foreign key constraints.

2. Reverse this topological order. This is now the order in which we will visit tables. We represent this order as:

𝕋 = [𝕋0, 𝕋1, 𝕋2, …] where each 𝕋i is the set of tables whose order is equivalent. For example, in the above topological sort 𝕋0 = {Data}.

3. The tables in 𝕋0 are ancestors of all other tables in the database. Sample each table in this first group at L percent of its rows in production.

Note: There is no guarantee that table 𝗧, the table we want to sample, will be found in the group 𝕋0. That is ok, though. Don’t fret.

4. Iterate through all other Table groups [𝕋1, 𝕋2, …] and further through each table in each group, then apply the following steps to each table, which we call ‘t’

- Find all tables that reference t. By the nature of our reversed topological sort, this set of tables will have already been visited and their subset will already exist in the new database.

- For each referencing table, collect all foreign key values to t from the destination database.

- Take the combined list of foreign key ids and grab all rows from the original database in table t whose primary key is in the collection.

- Insert these rows into the destination database.

We now have a new database containing a subset of the original database which maintains referential integrity. However, we have no guarantee that our desired result has been met, namely that table 𝗧 has been subsetted to P percent.

5. At this point, we compute the rows in table 𝗧 to determine how close we are to our desired result, P. If we call the actual result P′, then we can attempt to minimize the difference between P and P′, and our problem becomes finding a root to the equation:

f(L) = P - P′(L)

We use a root solving algorithm to vary L until we arrive at a P′ that is sufficiently close to P to meet our required tolerance.

Handling Cyclic Dependencies

The algorithm above can’t handle cyclic dependencies because topological sorts don’t exist for graphs with cycles. So how can we handle them? There isn’t a single obvious answer, so we’ll discuss a few approaches, their merits, and ultimately what we went with. But before we do that, let’s talk conceptually about what a cycle in the dependency graph means.

A cycle in the graph tells us that, for the tables that participate in the cycle, rows in those tables depend on other rows in the same table. The simplest case of a cycle is a table depending on itself; for example, the Users table may have a Referrer column that references another row in the Users table—the user that referred a given user. A more complex example involves more than one table: User has a Country_id column referencing the Country table, the Country table references the Language table through its Language_id column, and the Language table references the User table through its Moderator_id column. This example illustrates a database where the User table contains some users that are also community moderators, one moderator for each language.

Dropping Topological Sort

One way of handling cycles is to abandon topological sort. Instead, we treat the graph itself as the guide to subsetting. Start with the target table, and subset it according to the goal subsetting. Then follow the graph in a depth-first traversal, adding rows to tables according to the requirements imposed by the tables already visited. The algorithm ends when it stops adding additional rows, i.e. you’ve reached the transitive closure of the rows included in the first step. (This brief description doesn’t really explain the approach fully, but if we go too deep this post will never end. Hopefully, you get the idea.)

The problem with this approach, as you might guess, is that when a cycle is present, the transitive closure can get pretty large. And in fact, for our production test case, we found that when we target even a small portion (1%) of the starting dataset, we devolved into importing a large portion of the database (50%). This won’t always be the case—it’s very dependent on database structure—but it’s enough to disqualify this approach for us.

Dropping the Cycle

An alternative to abandoning topological sort is to abandon the cycle. To remove the cycle, we need to drop a foreign key reference between tables that are part of the cycle. The simplest way to do this is to replace the foreign key column with NULLs. Any cycle of foreign key columns will contain at least one NULLable column, otherwise you wouldn’t be able to insert the first row into the cycle. So while this may seem drastic, the schema will allow it.

By dropping the cycle, we can revert back to our original algorithm, using topological sort. This has the advantage of not producing large transitive closures and therefore running quickly and predictably. Of course, the price you pay is losing a foreign key relationship in your database. In practice you can recover some of that by performing a final pass where you import as much of the dropped column as you can, after the database has been subsetted. This means essentially keeping whatever foreign keys from the dropped column that were selected in the subset of the foreign table. Drawing from the cyclic example above, this means some Languages will have a non-NULL Moderator_id because those Users were part of the selected subset, but it’s very likely that not all Languages will be so lucky (depending on how much of the DB you imported).

All things considered, for our use cases, the predictability of this approach makes it a winner. And it’s what we use in the example below.

Supporting Passthrough Tables

A passthrough table is a table in which we maintain all rows from the original database in the subsetted database. Passthrough tables are useful for a number of reasons. For example, the table might contain API tokens or config values that are needed by your back-end system.

In order to support passthrough tables, we added some additional logic to Step 4 in the above algorithm. Prior to step 4.i, we first check if the table t is a passthrough table. If it is, we skip steps 4.i through 4.iv and, instead, copy the entire table from the source directly to the destination database. Tables downstream of the passthrough table in the topological sort are handled following the standard algorithm above. One potential hazard here is that if you treat a large table as passthrough, you can end up downloading a large set of other tables downstream of the passthrough table.

Using Condenser for Rapid Subsetting

We are going to use Condenser to solve a subsetting problem on a real production database. This data comes from one of our clients and is used in a real world production setup, however, we’ve changed the names of the tables to protect our customer’s IP and identity.

This is a complicated database that contains ~180 tables. We’ll focus, however, on a select set of 19 tables. These tables are special in that they form a closed group that doesn’t reference any other table outside of themselves. At a high level, this group of tables focuses on a user-driven event process. The group of tables is shown below.

Condenser is a config-driven CLI tool. Let’s start building our configuration file. We start by determining our desired end result which, in this case, is to subset the users table to 1% of the original. In Condenser, that would be written as:

This database also has a few tables that are needed by the back-end system and cannot be subsetted. We specify these passthrough tables as such:

Additionally, many database systems have hundreds of tables, and it can often be difficult to list all passthrough tables. We therefore also have the notion of a passthrough_threshold, which is an integer field that tells the system to treat any table with fewer rows than the threshold as a passthrough table as well.

tells us to consider any table under 100 rows as a passthrough table.

Putting it all together, we have:

Before running Condenser, we must specify the connection information for both the source and destination databases. This is done through the .source_db_connection_info and .destination_db_connection_info files. A sample is given below for destination. The source file should have the same fields:

Note that there is also an optional “password” field. You can specify it in the file if you wish, otherwise, you will be prompted to provide it each time you run Condenser.

When Condenser runs, it will perform the following steps:

- Grabs all schema information of the source table (via psql –schema-only)

- Applies schema (sans all constraints) to the destination database

- Runs subsetting algorithm

- Applies all constraints to the destination database, and verifies there are no violations

Results

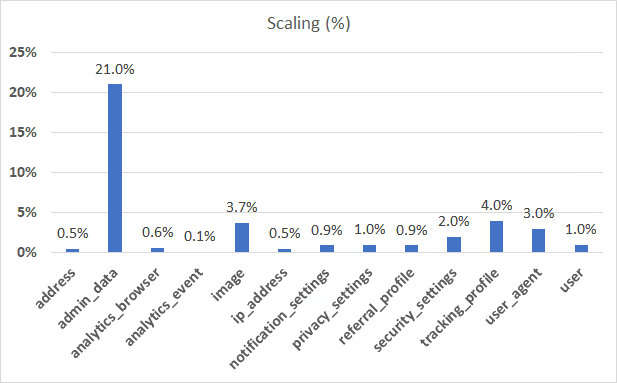

Running this result on nearly five million rows of data takes only a few minutes. The end result is a new DB containing only the subsetted data. The chart below gives a breakdown for each table of what percentage of rows were kept from the original table.

Note that the User table, which we required to be at 1%, is fixed to 1%, whereas the other tables vary in how much they were scaled. Also note that passthrough tables are not shown above.

Conclusion

We hope this post was useful and that you learned a few things about subsetting that you didn’t know before. If you need help applying this algorithm to one of your existing production databases, definitely reach out and we’ll be happy to assist.

Also, we have plans to take Condenser further. Here are a few things we’ll be doing next:

- Support a form of subsetting in which specific rows are kept, as opposed to requiring a percentage, e.g., create a database where Users with primary keys 5483, 3218, 9874 are included in end result.

- Allow support for sampling on multiple tables (or all tables), e.g., create a database with 5% of users and 10% of events, or create a database that keeps 15% of the total number of rows.

- Support additional databases. Currently, we support Postgres but we would like to include support for additional databases, such as MySQL.

- Support additional database capabilities, such as compound keys, non-integer primary keys, etc.

If you’re excited about database subsetting, download the tool and give it a whirl. Contributions are welcome, and if you need help getting started, just leave a message on the Github issues page.

Tonic’s mission is to make it easy to create high-quality synthetic replicas of sensitive data. Subsetting is just one tool in our tool box.

If you’d like to learn more about creating synthetic data, shoot us an email at hello@tonic.ai.

Adam Kamor, Co-founder and Head of Engineering at Tonic.ai, leads the development of synthetic data solutions that enable AI and development teams to unlock data safely, efficiently, and at scale. With a Ph.D. in Physics from Georgia Tech, Adam has dedicated his career to the intersection of data privacy, AI, and software engineering, having built developer tools, analytics platforms, and AI validation frameworks at companies such as Microsoft, Kabbage, and Tableau. He thrives on solving complex data challenges, transforming raw, unstructured enterprise data into high-quality fuel for AI & ML model training, to ultimately make life easier for developers, analysts, and AI teams.

.svg)

.svg)

.svg)